Search engine algorithm changes can sometimes be groundbreaking. Google recently announced a major update to its search algorithm, one the company describes as the biggest leap forward in the past five years and one of the biggest in the history of Search. Specifically, the update adopts BERT (Bidirectional Encoder Representations from Transformers), a neural network technique for understanding the intent behind user queries. Google says BERT will help it return more relevant results for 1 in 10 English-language searches in the US, and that it will extend the feature to other countries and languages over time. For featured snippets, however, Google has already released the update globally.

Successful digital marketing involves staying up to date with these algorithm changes, and sometimes even anticipating them. That can get overwhelming, but understanding what actually changed makes it much easier to decide how to respond.

This Algorithmic Update Is Big

Even if only 10% of searches are affected, that is significant: algorithm updates are usually subtle, and Google sometimes does not announce them at all. Google expects the update to be felt most on longer, conversational queries. These are the queries that convey more of the user’s true intent. In fact, Google is reported to prefer that users search with longer phrases these days, since they give its systems more detail to interpret.

Understanding the User’s Language Is the Greatest Challenge for Google

In the Google blog post announcing the update, Google’s Vice President of Search Pandu Nayak explains that the greatest challenge for Google Search has been understanding the language of the searcher, particularly since searchers do not always know how best to phrase a query. Searchers are aware that they are dealing with a machine, so they try to phrase the query in the way they think the machine will handle best. But Google may still not interpret the query the way the user intended. That can happen either because Google failed to form the intended connection between the words, or because the user’s phrasing was imprecise.

BERT Helps Google Figure out Ambiguous Queries

The BERT update is intended to deal with such ambiguous queries. Google introduced the underlying technology last year. TechCrunch reports that Google open-sourced the code along with pre-trained models so that other developers could use them.

Transformers are among the more recent deep learning models in machine learning. They work well on data in which the order of the elements matters, and they are closely associated with sequence-to-sequence learning. That makes them a natural fit for search queries, which require processing natural language.
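To make the idea a little more concrete, here is a minimal sketch of self-attention, the core operation inside a Transformer. It is a toy illustration only, not Google’s implementation: the three-dimensional word vectors are made up, and the learned projections, positional information, multiple heads and stacked layers of a real model such as BERT are all left out. What it does show is the "bidirectional" part: each word’s new representation is a weighted mix of every word in the query, both before and after it.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(vectors):
    """Scaled dot-product self-attention with no learned projections.

    Assumption for illustration: queries, keys and values are all just
    the input vectors themselves.
    """
    dim = len(vectors[0])
    weights, outputs = [], []
    for query in vectors:
        # Score this word against every word in the sequence.
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
            for key in vectors
        ]
        row = softmax(scores)
        weights.append(row)
        # New representation: weighted average of all word vectors.
        outputs.append([
            sum(row[j] * vectors[j][i] for j in range(len(vectors)))
            for i in range(dim)
        ])
    return weights, outputs

# Hypothetical tokens and made-up embeddings, purely for demonstration.
tokens = ["traveler", "to", "usa"]
embeddings = [[1.0, 0.0, 1.0], [0.0, 1.0, 0.0], [1.0, 1.0, 0.0]]

weights, contextual = self_attention(embeddings)
for token, row in zip(tokens, weights):
    print(token, [round(w, 2) for w in row])
```

Each printed row shows how much one word "looks at" every word in the query, including itself. In a real model these weights come from parameters learned during training rather than from hand-picked vectors.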

First Application of TPU Chips

This is the first time Google will use its TPU (Tensor Processing Unit) chips to serve search results, which makes the BERT update all the more significant. On paper at least, this means Google should be better at working out what you are looking for when you type in a search phrase, and can therefore return more relevant search results and featured snippets.

The Effect on Search Results

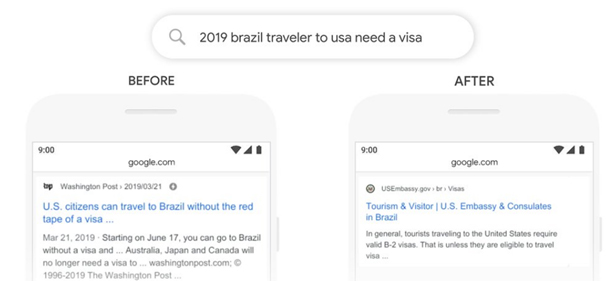

The update has started rolling out, and the influence of BERT may already be visible in the search results. So does it actually deliver more precise results in practice? Judging by the screenshots Google has shared of some oddly phrased searches, it does. Here’s one example:

(Image source: https://www.blog.google/products/search/search-language-understanding-bert/)

As the result on the right shows, Google has picked up on the relationship between the word “to” and the rest of the query, and worked out that a Brazilian user wants to know about traveling to the USA and not the other way around. The result on the left shows how Google interpreted the same query before BERT.
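A short sketch shows why a small word like “to” is easy to lose. It assumes a deliberately naive matcher that treats a query as an unordered bag of keywords. That is a simplification for illustration, not a description of how Google Search worked before BERT, and the two queries are made-up examples. Under that assumption, the two travel directions look identical, and only a representation that keeps word order can tell them apart.

```python
from collections import Counter

def bag_of_words(query):
    """Order-insensitive view: just the words and their counts."""
    return Counter(query.lower().split())

def ordered_tokens(query):
    """Order-sensitive view: the words in the sequence they were typed."""
    return tuple(query.lower().split())

# Hypothetical queries with opposite travel directions.
query_a = "brazil traveler to usa"
query_b = "usa traveler to brazil"

same_keywords = bag_of_words(query_a) == bag_of_words(query_b)
same_sequence = ordered_tokens(query_a) == ordered_tokens(query_b)

print(same_keywords)  # True: keyword matching cannot see the direction
print(same_sequence)  # False: the word order carries the direction
```

The meaning of the query lives in where “to” sits relative to the two country names, which is exactly the kind of relationship a context-aware model is meant to capture.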

Google admits that understanding language is the greatest challenge for Search. But with advanced machine learning in the form of BERT, the connections between words are analyzed in context, so the results are more likely to match what the user actually intended.

Experienced healthcare SEO outsourcing providers are aware of the potential implications of this update. They can make the changes needed to help their healthcare clients avoid a drastic drop in rankings.