Does your website’s search engine index contain an excessive number of pages, most of which have no value to users? This is known as index bloat. It refers to a situation where Google’s crawlers index a large number of low-quality or irrelevant pages on a website, i.e. pages that you don’t want appearing in search results.

When Googlebot crawls and indexes a website, its goal is to offer users the best and most relevant content. Index bloat works against that goal and can diminish a website’s authority and its ranking in search engine results. Outsourcing digital marketing tasks to an experienced search engine optimization company can help with efficient indexing and ranking by the search engine algorithms.

What Causes Index Bloat?

Index bloat occurs when search engines index pages that don’t add value to users, such as filtered product pages with URL parameters, internal search results, printer-friendly versions, thank you pages, test URLs, and thin content. Common culprits include filter combinations, disorderly archives, unrestrained tags, pagination, unruly parameters, expired content, non-optimized search results, auto-generated profiles, tracking URLs, inconsistent HTTP/HTTPS and www usage, and subdirectories that shouldn’t be indexed.
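
Several of these culprits (tracking URLs, filter parameters, HTTP/HTTPS and www variants) are really one page reachable at many addresses. A minimal Python sketch, assuming a hypothetical list of parameters that don’t change page content, shows how such variants collapse into a single URL. The parameter names and the choice of HTTPS without www as the preferred form are illustrative assumptions, not rules from any search engine.

```python
from urllib.parse import urlsplit, urlunsplit, parse_qsl, urlencode

# Hypothetical parameters that only track or filter; adjust for your own site.
IGNORED_PARAMS = {"utm_source", "utm_medium", "utm_campaign", "sessionid", "sort", "color"}

def normalize_url(url: str) -> str:
    """Collapse protocol, www, trailing-slash, and parameter variants into one form."""
    parts = urlsplit(url)
    host = parts.netloc.lower()
    if host.startswith("www."):
        host = host[4:]
    # Keep only the query parameters that actually change the page content.
    kept = [(k, v) for k, v in parse_qsl(parts.query) if k not in IGNORED_PARAMS]
    path = parts.path.rstrip("/") or "/"
    return urlunsplit(("https", host, path, urlencode(sorted(kept)), ""))

def group_variants(urls):
    """Map each normalized URL to the raw variants that collapse into it."""
    groups = {}
    for url in urls:
        groups.setdefault(normalize_url(url), []).append(url)
    return groups
```

Any group with more than one raw URL is a candidate for a canonical tag or a redirect.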

Index bloat can build up in several ways:

- Frequent updates and deletions: If pages are frequently moved, renamed, or deleted without being redirected or removed from the index, outdated URLs linger in the search index.

- Duplicate or redundant content: Having the same content reachable at several URLs makes the index grow unnecessarily. This often happens with URL parameters, printer-friendly versions, and protocol or www variants.

- Unused pages: Pages that are published but serve no purpose for searchers, such as test URLs and thank you pages, take up crawl resources without providing any benefit.

- Inefficient site structure: A poorly planned structure, such as an excess of tags, categories, and archives, multiplies the number of low-value URLs.

Any website can suffer from index bloat caused by pagination issues, by having both secure and non-secure versions of your website indexed, and by allowing WordPress blog categories, tags, archives, etc. to be indexed by Google and other search engines.

Does Index Bloat Hurt Your SEO?

Yes, index bloat can hurt your SEO (search engine optimization). Even if it doesn’t harm the search rankings of your website’s desirable pages, it can cannibalize your site’s organic search traffic. Once indexed, the undesirable pages compete with the desirable ones for the same searches and draw clicks away from them.

Search engine bots have limited resources and time to crawl and index web pages. Index bloat can force search engines to spend resources on irrelevant or low-quality pages, slowing down the crawl process and potentially causing important pages to be crawled less frequently.

How an SEO Company Can Help to Index Your Site in Google

Diagnosing the Issue

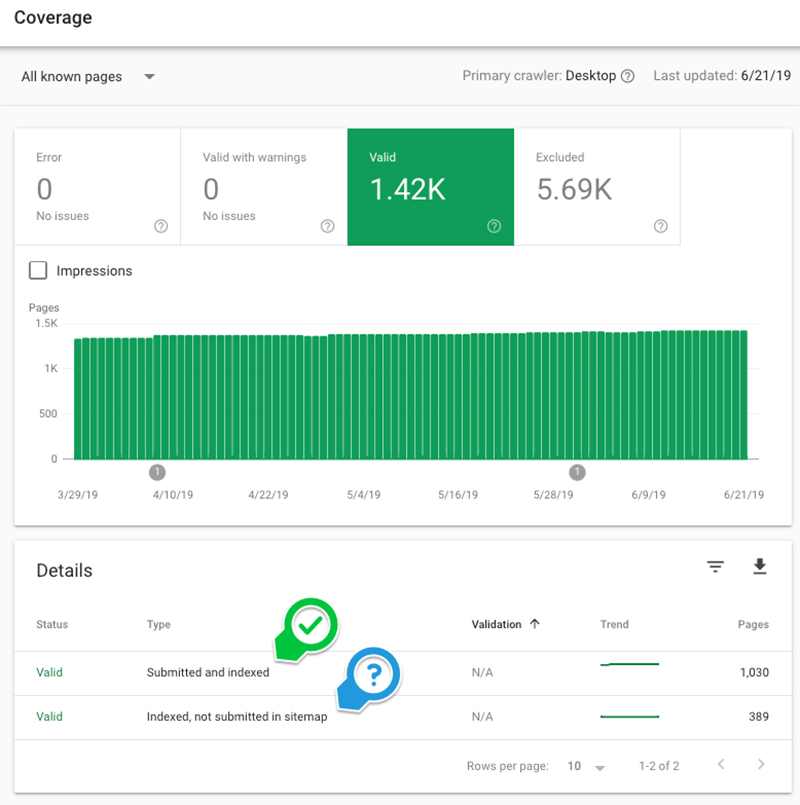

To diagnose index bloat, measure your indexation rate by cross-referencing the pages you want indexed against those that Google is actually indexing. The analysis leads to two actions. Pages with good-quality content must stay indexable. Pages with low-quality content should be removed from the index with a noindex meta tag. An added advantage of this analysis is that it can surface good pages that were missed when your XML sitemap was created. Add these to the sitemap to ensure better indexing.
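
To confirm that a low-quality page really carries the noindex directive, you can inspect its HTML. The sketch below uses only Python’s standard library; the class and function names are made up for illustration, and it only looks at the robots meta tag, not at HTTP headers or robots.txt.

```python
from html.parser import HTMLParser

class RobotsMetaParser(HTMLParser):
    """Collects the directives found in <meta name="robots" content="..."> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr = dict(attrs)
        if (attr.get("name") or "").lower() == "robots":
            content = attr.get("content") or ""
            self.directives.update(
                d.strip().lower() for d in content.split(",") if d.strip()
            )

def has_noindex(html: str) -> bool:
    """Return True when the page's robots meta tag includes noindex."""
    parser = RobotsMetaParser()
    parser.feed(html)
    return "noindex" in parser.directives
```

For example, `has_noindex('<meta name="robots" content="noindex, follow">')` returns `True`.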

The ideal indexation rate is 100%, where all of the quality pages on your site are indexed and nothing unwanted is. The pages that cause index bloat are usually unintentional internal duplicates, paginated pages, pages with thin content, and content that underperforms.

You can check your property in Google Search Console (GSC). Head to the Index Coverage Status report, which shows how many URLs in total Google has found on your site.
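
The cross-referencing can be sketched with plain set arithmetic. This assumes you already have two lists: the URLs you want indexed (for example, from your sitemap) and the URLs reported as indexed (for example, exported from Search Console). The function name and the report keys are hypothetical, and the rate here is defined as the share of wanted pages that are indexed, with unwanted indexed pages listed separately as bloat.

```python
def indexation_report(wanted_urls, indexed_urls):
    """Compare the pages you want indexed with the pages that are indexed."""
    wanted, indexed = set(wanted_urls), set(indexed_urls)
    covered = wanted & indexed
    return {
        # Share of wanted pages that made it into the index.
        "indexation_rate": len(covered) / len(wanted) if wanted else 0.0,
        # Good pages the index is missing (candidates for the sitemap).
        "missing": sorted(wanted - indexed),
        # Indexed pages you never wanted there (the bloat).
        "bloat": sorted(indexed - wanted),
    }
```

A site is in good shape when the rate is 1.0 and the bloat list is empty.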

Here’s an example of the data:

Note down the number of pages that Google says it is indexing.

You can then run a site:domain.com search on Google and check the number of results Google serves from its index. Compare that number with the figures from your other checks, such as the GSC report. Any difference is an indication of inefficient indexation. The Google Webmaster guidelines can help you understand the quality criteria Google follows for page indexing. Comparing the numbers from these sources helps you find site pages that have low value.

Pages That Don’t Need to Be Indexed

The main sources of index bloat, that is, pages that don’t need to be indexed, include test pages, thank you pages, custom post types, testimonial pages, author pages, and blog tag and blog category pages, among others. WordPress sites can use plug-ins such as All in One SEO or Yoast SEO to track such page types.

Figuring out if your XML sitemap has pages that shouldn’t be indexed depends on the kind of site you have and the purpose of those pages. Are they really essential for your site? Are there duplicate pages elsewhere? The examples stated above are usually the kind of pages that need to be avoided.
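
A quick way to start that review is to pull the URLs out of the sitemap and flag the ones that match the page types listed above. The sketch below parses a standard XML sitemap with Python’s standard library. The path patterns are hypothetical and will differ from site to site, so treat the output as a list to review by hand, not a list to delete.

```python
import re
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}

# Hypothetical path patterns for pages that rarely need indexing.
LOW_VALUE_PATTERNS = [r"/tag/", r"/category/", r"/author/", r"/thank-you", r"/test"]

def sitemap_urls(xml_text):
    """Return every <loc> URL listed in an XML sitemap."""
    root = ET.fromstring(xml_text)
    return [loc.text.strip() for loc in root.findall("sm:url/sm:loc", SITEMAP_NS) if loc.text]

def flag_low_value(urls):
    """Return the URLs that match any of the low-value patterns."""
    return [u for u in urls if any(re.search(p, u) for p in LOW_VALUE_PATTERNS)]
```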

Google’s indexation can get tricky, as SEOs know from experience, so some tweaking and analysis are essential. Crawling and indexing are two different things: Google doesn’t necessarily index everything it crawls, or show everything it has indexed. That’s one of the reasons behind the unpredictability of search results.

How Do You Fix Index Bloating?

- Deleting Pages for Higher Search Rankings

Deleting pages to perform better in search rankings may sound like an idea conceived just to grab attention. But while the concept seems strange on the face of it, there is real reasoning behind it in the context of index bloat. If your website has a number of outdated pages you no longer use, you can delete them and benefit from fewer thin content pages, fewer redirects and 404s, and less chance of error and misinterpretation by search engines. By limiting the options you give search engines, you gain more control over your site and your search engine optimization efforts.

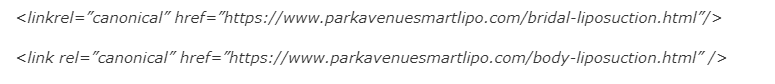

- Canonical Tag

A canonical tag is a snippet of code that lets search engine bots distinguish a page’s preferred version from very similar-looking pages. When you place a canonical tag in a page’s `<head>` section, you tell the bot that it should index only the preferred version.

Place the tag on the version of the page that is not preferred. It should contain a link to the preferred version. Here’s the format, with a placeholder URL standing in for your preferred page: `<link rel="canonical" href="https://www.example.com/preferred-page/" />`

Canonical tags only affect how search bots crawl and index your pages, and do not affect users while they’re browsing your site.
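
To audit canonical tags across a site, you can read the tag out of each page and check whether it points somewhere else. This is a rough sketch using Python’s standard library; the function names are invented for illustration, and it compares URLs as plain strings without any normalization.

```python
from html.parser import HTMLParser

class CanonicalParser(HTMLParser):
    """Remembers the href of the first <link rel="canonical"> tag it sees."""

    def __init__(self):
        super().__init__()
        self.canonical = None

    def handle_starttag(self, tag, attrs):
        attr = dict(attrs)
        rel = (attr.get("rel") or "").lower().split()
        if tag == "link" and "canonical" in rel and self.canonical is None:
            self.canonical = attr.get("href")

def canonical_of(html):
    """Return the canonical URL declared in the HTML, or None if there is none."""
    parser = CanonicalParser()
    parser.feed(html)
    return parser.canonical

def canonical_points_elsewhere(page_url, html):
    """True when the page declares a different URL as its preferred version."""
    canonical = canonical_of(html)
    return canonical is not None and canonical != page_url
```

A filtered URL such as a color variant of a product list should return `True` here, while the preferred page itself should return `False`.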

- Redirects

Redirects are another option when a site’s index is bloated with old pages that now return 404 errors when someone clicks a link to them. If a site has changed the folder structure for these pages, both the old and the new URLs could be indexed. The solution is to redirect the old URL to the new page, so that search engines settle on the new URL and the user experience is uncompromised.
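
The folder-structure case can be sketched as a simple lookup from old path prefixes to new ones. The folder names below are hypothetical, and using the 301 (permanent) status code is an assumption about what suits a permanent move. On a real site this logic usually lives in the web server or CMS configuration instead of application code.

```python
# Hypothetical mapping of old folder prefixes to their new locations.
FOLDER_MOVES = {
    "/blog/2019/": "/articles/",
    "/products-old/": "/shop/",
}

def resolve_redirect(path):
    """Return (status, location): 301 plus the new path if the folder moved, else 200."""
    for old_prefix, new_prefix in FOLDER_MOVES.items():
        if path.startswith(old_prefix):
            # Keep the rest of the path so each old page maps to its new twin.
            return 301, new_prefix + path[len(old_prefix):]
    return 200, path
```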

- Pagination

Let’s look at pagination. Pagination can produce a kind of duplicate content when long content spills over onto more than one page, or when a product list spreads across several pages. Such pages mostly share duplicate meta descriptions and title tags. To deal with that, you need to state the relationship between the consecutive pages. This can be done by adding rel="next" or rel="prev" to the pages that are connected, which helps the search bot recognize that these pages are related. Note that Google has said it no longer uses these attributes as an indexing signal, although other search engines may still read them.
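
As an illustration, the tags for any page in a series can be generated from the page number. The sketch below assumes a hypothetical URL scheme in which page 1 is the base URL and later pages add a `?page=N` parameter; your site’s scheme may differ.

```python
def pagination_links(base_url, page, total_pages):
    """Build the rel="prev" / rel="next" link tags for one page of a series."""

    def page_url(n):
        # Assumed scheme: page 1 is the base URL, later pages use ?page=N.
        return base_url if n == 1 else f"{base_url}?page={n}"

    tags = []
    if page > 1:
        tags.append(f'<link rel="prev" href="{page_url(page - 1)}">')
    if page < total_pages:
        tags.append(f'<link rel="next" href="{page_url(page + 1)}">')
    return tags
```

The first page gets only a next tag, the last page only a prev tag, and every page in between gets both.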

- URL Removal Tool

You can use the URL Removal Tool in Google Search Console to get rid of unwanted pages. This tool allows you to request that Google remove specific URLs from its index. These requests are typically processed within the same day they are requested. However, this is only a temporary solution: unless you take measures such as those mentioned above to prevent unwanted pages from being indexed again, they will return to Google’s index when the search engine next crawls your website.

Index Bloat Prevention Tips

- Plan website structure carefully to avoid unnecessary pages

- Regularly review and update content

- Monitor indexed pages in search console

- Use clear and consistent URL structures

- Use the ‘noindex’ meta tag for unused pages

- Optimize pagination with rel="prev" and rel="next" tags

- Avoid diluting page authority by publishing only high-quality content

- Properly handle HTTP to HTTPS transitions

Implementing these tips will contribute to a cleaner and more efficient website, reducing the risk of index bloat and enhancing your SEO efforts. With professional digital marketing services, businesses can get the index bloat issue dealt with and ensure their website performs well in search rankings, delivering good ROI.
