Every enterprise aims to increase organic search traffic to its website in order to grow its business. According to some research, 53 percent of website traffic comes from organic search. For that to happen, however, your website has to show up in the search results. There are two ways to get your website indexed by search engines such as Google and Bing. One is the tortoise approach, where you wait for it to happen naturally, which takes a lot of time. The other is to spend time and energy improving your conversion rate and social presence and promoting your informative content, for example by investing in a search engine optimization company.

Why Should Google Index Your Website?

As mentioned earlier, your website will only show up in the search results if Google has indexed it. Google uses spiders that crawl your website so that it can be indexed, so it is important to ensure that your website has a good crawl rate. The spider’s job is to search the web for new content and to update the previously indexed version of your site. A new page on an existing site, an update to an existing page, or a completely new site or blog can all count as “new content”. When a spider discovers a new site or page, it must determine what that site or page is about. If the spiders are unable to tell the search engine that your pages exist, those pages will not appear in search results. In simple terms, indexing is the processing of the data that the search engine spiders have gathered while crawling the web. The more frequently your site is crawled and indexed, the sooner new and updated content can appear in search results.

The spider takes note of new documents and alterations, which are then uploaded to Google’s searchable index. Those pages are only added if they have high-quality content and don’t raise any red flags by employing unethical practices such as keyword stuffing or developing a large number of links from questionable sources.

10 Ways to Get Indexed by Google

When you publish a new post or page, you want Google to know that you have added something, and you want the search engine spider to crawl and index your page or website. So, here are a few steps:

- Eliminate crawl blocks in your robots.txt file: Sometimes your website may not get indexed because of a crawl block in your robots.txt file. To check for this, go to yourdomain.com/robots.txt and look for either of the following two snippets:

      User-agent: Googlebot
      Disallow: /

      User-agent: *
      Disallow: /

Both of these tell the spiders that they are not allowed to crawl any pages on your website. You can fix the issue simply by removing them. If Google isn’t indexing a particular web page, a crawl block in robots.txt could also be the cause. To see if this is the case, paste the URL into Google Search Console’s URL inspection tool, click the Coverage block for more information, and look for the “Crawl allowed? No: blocked by robots.txt” error. This shows that the page has been blocked by the robots.txt file. If so, examine your robots.txt file for any “disallow” rules that apply to the page and remove them where necessary.
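The robots.txt check described above can also be scripted. The following is a minimal sketch using Python's standard `urllib.robotparser` module; the helper name `is_crawl_allowed` and the two sample rule sets are assumptions made for illustration, and the rules are passed in as text so the example runs without a network connection.

```python
from urllib.robotparser import RobotFileParser


def is_crawl_allowed(robots_txt: str, url: str, user_agent: str = "Googlebot") -> bool:
    """Return True if the given robots.txt text lets user_agent fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


# Sample rules with a site-wide crawl block for every crawler.
BLOCKED = "User-agent: *\nDisallow: /\n"
# Sample rules that block only a hypothetical /private/ folder.
PARTIAL = "User-agent: *\nDisallow: /private/\n"

if __name__ == "__main__":
    page = "https://yourdomain.com/blog/new-post/"
    print(is_crawl_allowed(BLOCKED, page))  # False
    print(is_crawl_allowed(PARTIAL, page))  # True
```

To test a live site, the contents of yourdomain.com/robots.txt would be downloaded first and passed in as `robots_txt`. The standard-library parser may not interpret every rule exactly as Googlebot does, so Search Console remains the authoritative check.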

- Avoid rogue noindex tags: If you don’t want Google to index a page, you can tell it not to, but a noindex directive left on a page by mistake will also keep that page out of the index. The directive can be set in two ways:

- Meta Tag: Any page that has either of the following tags in its <head> section will not be indexed by Google:

      <meta name="robots" content="noindex">

      <meta name="googlebot" content="noindex">

To find all the pages that have a “noindex” meta tag, run a crawl with Ahrefs’ Site Audit tool and check for “Noindex page” warnings. Check the affected pages and remove the noindex meta tag from any page you want indexed.

- X-Robots-Tag: This is the second method. Crawlers also respect the X-Robots-Tag HTTP response header. It can be set with a server-side programming language like PHP, by editing your .htaccess file, or by changing your server configuration. The URL inspection tool in Search Console will tell you if this header is preventing Google from indexing a page. Simply enter your URL and look for “Indexing allowed? No: ‘noindex’ detected in ‘X-Robots-Tag’ http header”. To check for this issue across your entire website, run Ahrefs’ Site Audit tool, then ask your developer to stop the pages you want indexed from returning this header.

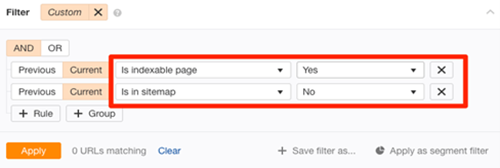
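Both noindex mechanisms can be checked from a page's HTML and its response headers. The sketch below uses only Python's standard library; `RobotsMetaParser` and `find_noindex` are hypothetical names, and the header handling is deliberately simple (it does not handle crawler-specific values such as `googlebot: noindex`).

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collect directives from <meta name="robots"> and <meta name="googlebot">."""

    def __init__(self):
        super().__init__()
        self.directives = []

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attributes = dict(attrs)
        name = (attributes.get("name") or "").lower()
        if name in ("robots", "googlebot"):
            content = (attributes.get("content") or "").lower()
            self.directives.extend(part.strip() for part in content.split(","))


def find_noindex(html: str, headers: dict) -> list:
    """Return which sources ("meta tag", "X-Robots-Tag header") carry noindex."""
    found = []
    parser = RobotsMetaParser()
    parser.feed(html)
    if "noindex" in parser.directives:
        found.append("meta tag")
    # HTTP header names are case-insensitive, so normalise them before lookup.
    lowered = {key.lower(): value.lower() for key, value in headers.items()}
    header_parts = [p.strip() for p in lowered.get("x-robots-tag", "").split(",")]
    if "noindex" in header_parts:
        found.append("X-Robots-Tag header")
    return found


if __name__ == "__main__":
    sample_html = '<html><head><meta name="robots" content="noindex"></head></html>'
    print(find_noindex(sample_html, {"X-Robots-Tag": "noindex, nofollow"}))
```

In practice the HTML and headers would come from fetching each URL on the site, and an empty result means neither mechanism was detected by this simplified check.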

- Your page should be included in your sitemap: Google uses a sitemap to determine which pages on your site are important and which are not. It may also provide information on how frequently they should be crawled. Even though Google may be able to find pages on your website whether or not they’re in your sitemap, it’s still a good idea to include them. Use the URL inspection tool in Search Console to see if a page is in your sitemap. If you get the “URL is not on Google” error together with “Sitemap: N/A”, the page is neither in your sitemap nor indexed. To find all the crawlable and indexable pages that are missing from your sitemap, run a crawl in Ahrefs’ Site Audit, go to Page Explorer, and filter for indexable pages that are not in the sitemap.

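These pages should be in your sitemap, so add them. Once done, let Google know that you’ve updated your sitemap. The traditional way was to ping this URL:

http://www.google.com/ping?sitemap=http://yourwebsite.com/sitemap_url.xml

Google announced in 2023 that it was retiring this ping endpoint, so resubmitting the sitemap in Search Console is now the more dependable route.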
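Checking whether particular pages are listed in a sitemap is easy to automate. Here is a rough sketch using Python's standard XML parser; it assumes a plain sitemap in the sitemaps.org format rather than a sitemap index, and the function names and sample URLs are illustrative only.

```python
import xml.etree.ElementTree as ET

# Namespace used by sitemaps that follow the sitemaps.org protocol.
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def sitemap_urls(sitemap_xml: str) -> set:
    """Return every <loc> URL listed in a sitemap document."""
    root = ET.fromstring(sitemap_xml)
    return {loc.text.strip() for loc in root.findall(".//sm:loc", SITEMAP_NS) if loc.text}


def missing_from_sitemap(sitemap_xml: str, pages: list) -> list:
    """Return the pages (in their original order) that the sitemap does not list."""
    listed = sitemap_urls(sitemap_xml)
    return [page for page in pages if page not in listed]


# A small hand-written sitemap used only for demonstration.
EXAMPLE = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://yourwebsite.com/</loc></url>
  <url><loc>https://yourwebsite.com/blog/</loc></url>
</urlset>"""

if __name__ == "__main__":
    pages = ["https://yourwebsite.com/blog/", "https://yourwebsite.com/new-page/"]
    print(missing_from_sitemap(EXAMPLE, pages))
```

The comparison is an exact string match, so URLs that differ only by a trailing slash or by protocol are treated as different pages.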

- Exclude rogue canonical tags: A canonical tag tells Google which version of a page is preferred. It looks like this:

      <link rel="canonical" href="/page.html/">

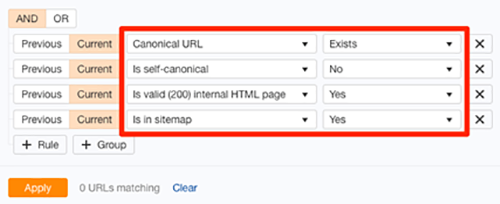

The majority of pages have either no canonical tag or a self-referencing canonical tag. This tells Google that the page itself is the preferred, and most likely the only, version. In other words, you want this page to be indexed. However, if your page contains a rogue canonical tag, it may point Google to a preferred version of the page that does not exist, in which case your page will not be indexed. Use Google’s URL inspection tool to check for a canonical. If the canonical tag points to another page, you’ll get an “Alternate page with canonical tag” notice. If that notice is unwanted and you do want the page indexed, remove the canonical tag. An easy way to find rogue canonical tags across your website is to run Ahrefs’ Site Audit tool, go to Page Explorer, and filter for pages whose canonical tag points to a different URL.
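The difference between a missing, self-referencing, and rogue canonical tag can be illustrated with a short script. This is a sketch under simple assumptions: `canonical_status` is a hypothetical helper, only the first canonical tag on the page is considered, and a trailing slash is ignored when comparing URLs.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class CanonicalParser(HTMLParser):
    """Remember the href of the first <link rel="canonical"> tag."""

    def __init__(self):
        super().__init__()
        self.canonical = None

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        rel = (attributes.get("rel") or "").lower().split()
        if tag == "link" and "canonical" in rel and self.canonical is None:
            self.canonical = attributes.get("href")


def canonical_status(page_url: str, html: str) -> str:
    """Return "none", "self", or "other" for the page's canonical tag."""
    parser = CanonicalParser()
    parser.feed(html)
    if not parser.canonical:
        return "none"
    # Resolve relative hrefs such as /page.html against the page URL.
    target = urljoin(page_url, parser.canonical)
    return "self" if target.rstrip("/") == page_url.rstrip("/") else "other"


if __name__ == "__main__":
    html = '<head><link rel="canonical" href="/other-page.html"></head>'
    print(canonical_status("https://yourdomain.com/page.html", html))  # other
```

A result of "other" on a page you want indexed is the situation described above and is worth reviewing by hand.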

- Verify whether any of your pages are orphaned: Orphan pages are pages that have no internal links pointing to them. Because Google discovers new content by crawling the web, it cannot discover orphan pages that way. Visitors to the website won’t be able to find them either. Crawl your site with Ahrefs’ Site Audit tool to look for orphan pages, then look for “Orphan page (has no inbound internal links)” errors in the Links report. It shows pages that are indexable and present in your sitemap but have no internal links. If you are not confident that every page you want indexed is in your sitemap, follow these steps:

- Download a full list of pages on your site (via your CMS)

- Crawl your website (using a tool like Ahrefs’ Site Audit)

- Cross-reference the two lists of URLs

If any of the URLs were not found during the crawl, then those pages are orphan pages and you can fix them in one of the following two ways:

- If the page is unimportant, delete it and remove it from your sitemap

- If the page is important, incorporate it into the internal link structure of your website
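The cross-referencing step above amounts to a set difference between the two URL lists. A minimal sketch follows, assuming both lists are already available as Python lists of strings; `find_orphan_pages` is a hypothetical helper, and ignoring trailing slashes is a simplifying assumption.

```python
def find_orphan_pages(cms_urls, crawled_urls):
    """Return CMS URLs that the crawl never reached, sorted alphabetically."""

    def normalise(url):
        # Treat https://site.com/page and https://site.com/page/ as the same page.
        return url.strip().rstrip("/")

    crawled = {normalise(url) for url in crawled_urls}
    return sorted(url for url in cms_urls if normalise(url) not in crawled)


if __name__ == "__main__":
    cms = [
        "https://yourdomain.com/",
        "https://yourdomain.com/about/",
        "https://yourdomain.com/old-landing-page/",
    ]
    crawl = ["https://yourdomain.com", "https://yourdomain.com/about"]
    print(find_orphan_pages(cms, crawl))
```

Every URL returned exists in the CMS but was not reached by following internal links, which makes it a candidate orphan page.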

- Fix nofollow internal links: Links with the rel="nofollow" attribute are nofollow links. They prevent PageRank from being passed to the destination URL, and Google does not crawl them either. Google’s guidance on this is, in essence, that using nofollow causes the target links to be dropped from its overall web graph, although the target pages may still remain in its index if other sites link to them without nofollow or if the URLs are submitted to Google in a Sitemap. In short, make sure that every internal link to an indexable page is followed. To do this, crawl your site with Ahrefs’ Site Audit tool and look in the Links report for indexable pages with “Page has nofollow incoming internal links only” issues.
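For a single page, nofollow internal links can be listed with a few lines of standard-library Python. In this sketch the class and function names are hypothetical, and "internal" is assumed to mean "same host as the page being checked".

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse


class NofollowLinkParser(HTMLParser):
    """Collect internal links on a page that carry rel="nofollow"."""

    def __init__(self, page_url):
        super().__init__()
        self.page_url = page_url
        self.nofollow_internal = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        attributes = dict(attrs)
        href = attributes.get("href")
        rel = (attributes.get("rel") or "").lower().split()
        if not href or "nofollow" not in rel:
            return
        # Resolve relative links, then keep only those on the same host.
        target = urljoin(self.page_url, href)
        if urlparse(target).netloc == urlparse(self.page_url).netloc:
            self.nofollow_internal.append(target)


def nofollow_internal_links(page_url, html):
    """Return absolute URLs of nofollow links that stay on the page's own host."""
    parser = NofollowLinkParser(page_url)
    parser.feed(html)
    return parser.nofollow_internal


if __name__ == "__main__":
    html = '<a href="/about/" rel="nofollow">About</a> <a href="/contact/">Contact</a>'
    print(nofollow_internal_links("https://yourdomain.com/blog/", html))
```

Running this over every crawled page and grouping the results by target URL would show which pages receive only nofollow internal links.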

- Include strong internal links: Google finds new content by crawling your website, so it may not be able to find a page that has no internal link pointing to it. Adding some internal links to the page is a simple solution to this problem. You can add them from any web page that Google can crawl and index. If you want Google to index the page as quickly as possible, though, link to it from one of your “stronger” pages, because Google is more likely to re-crawl such pages than less important ones. To find them, enter your domain in Ahrefs’ Site Explorer and open the Best by links report. It lists your most authoritative pages first, so you can go through the list and look for relevant pages from which to add internal links to the page in question.

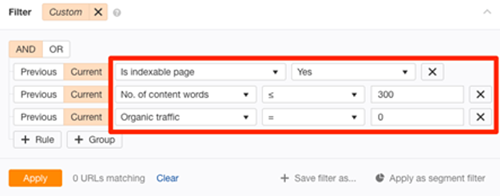

- Ensure that the page is unique and valuable: Google is unlikely to index low-quality pages, as they provide no value to the user. Your website or web page must be “great and motivating” if you want Google to index it. If you’ve ruled out technical reasons for the lack of indexing, a lack of value may be to blame. So it’s worth going over the page again with fresh eyes and asking yourself, “Is this page truly valuable? If a user clicked on this page from the search results, would they find it useful?” If you answered no to either of those questions, your content needs to be improved. Using Ahrefs’ Site Audit tool and URL Profiler, you can uncover more potentially low-quality pages that aren’t indexed. Start in Page Explorer in Ahrefs’ Site Audit and filter for indexable pages with thin content and little organic traffic.

This will return “thin” pages that are indexable but have little organic traffic at the moment. To put it another way, there’s a good probability they aren’t indexed. After exporting the report, paste all of the URLs into the URL Profiler and check for Google indexation. Check for quality issues on any pages that aren’t indexed. If necessary, make improvements before requesting re-indexing using Google Search Console. You should also try to resolve duplicate content concerns. Duplicate or near-duplicate pages are unlikely to be indexed by Google. Check for duplicate content issues with Site Audit’s Duplicate Content report.

- Take out low-quality pages: Having an excessive number of low-quality pages on your website wastes crawl budget. According to Google, “crawl budget […] is not something most publishers have to worry about,” and “if a site has fewer than a few thousand URLs, it will be crawled efficiently most of the time.” Still, it’s never a bad idea to remove low-quality pages from your website, as doing so can only benefit your crawl budget. You can use a content audit template to identify pages that are potentially low-quality or irrelevant and can be removed.

- Develop strong, high-quality backlinks: Backlinks tell Google that a web page is important. After all, if others are linking to it, it must be worthwhile, and these are the pages Google wants to index. To be fully transparent, Google does not index only pages with backlinks. There are plenty of indexed pages (billions) that have no backlinks at all. However, since Google considers pages with high-quality links to be more important, it is likely to crawl and re-crawl them faster than pages without such links. As a result, indexing takes less time.

All of the above tips help businesses get their websites indexed by Google. You can also reach out to an SEO outsourcing company to implement the optimization techniques that help get your website indexed.