Want to know how users and search engines interact with your site, the most active pages in your site that drive organic traffic, and the number of SEO visits to your site?

The answer is there in your site’s log file.

So, what is a log file?

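A log file is a file kept by the web server that records every request made to the site, whether it comes from a human visitor or a search engine bot.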


The site’s web server collects and stores log file records for a set period of time. Professional digital marketing services in New York can help analyze these records to improve your site’s SEO performance.

What does the log file include?

From a log file, you can read or derive a range of data, including:

- Search engine type and search term entered

- The URL of the page or resource being requested

- HTTP status code of the request

- IP Address and hostname

- Country or region of origin

- A timestamp of the hit

- The browser and operating system used

- Duration and number of pages visited by the user

- Page on which the user has left the website

- The user agent making the request

- Time taken to download the resource
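
To show how several of these fields can be pulled out of a raw record, here is a minimal Python sketch. It assumes the server writes the common Apache/Nginx "combined" log format; your server may be configured differently, and the sample line and the `parse_line` helper are made up for illustration.

```python
import re
from datetime import datetime

# Pattern for the "combined" log format (an assumption; check your server config).
COMBINED_LOG = re.compile(
    r'(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<url>\S+) (?P<protocol>[^"]*)" '
    r'(?P<status>\d{3}) (?P<size>\S+) '
    r'"(?P<referrer>[^"]*)" "(?P<user_agent>[^"]*)"'
)

def parse_line(line):
    """Return a dict of fields for one log line, or None if it does not match."""
    match = COMBINED_LOG.match(line)
    if match is None:
        return None
    entry = match.groupdict()
    entry["status"] = int(entry["status"])
    # The size field is "-" when no body was sent.
    entry["size"] = 0 if entry["size"] == "-" else int(entry["size"])
    entry["time"] = datetime.strptime(entry["time"], "%d/%b/%Y:%H:%M:%S %z")
    return entry

# Hypothetical sample record, not taken from a real site.
sample = (
    '66.249.66.1 - - [10/Oct/2023:13:55:36 +0000] '
    '"GET /blog/seo-tips HTTP/1.1" 200 5120 '
    '"-" "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"'
)
entry = parse_line(sample)
print(entry["url"], entry["status"], entry["user_agent"])
```

Fields such as country, session duration, or exit page are not stored in the record itself; they would have to be derived, for example from the IP address or by grouping requests from the same visitor.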

How does log file analysis impact SEO?

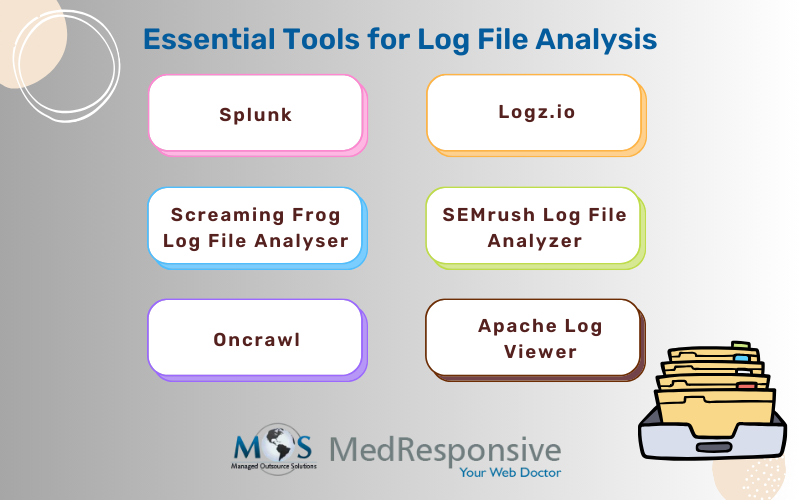

Log file analysis is one of the top technical SEO strategies, providing insights into how web pages are crawled and indexed. It matters for SEO because it lets webmasters process and analyze relevant data about how their website is used. For larger websites, log file analysis can involve processing very large amounts of data, which in the long term requires a powerful IT infrastructure.

Regular log file analysis helps SEO professionals clearly understand how search engines crawl their website and uncover valuable insights for data-driven decisions.

For SEO purposes, log file analysis:

- Helps understand crawl behavior

- Shows how much “crawl budget” is being wasted and where

- Helps find and fix accessibility errors such as 404 and 500 responses

- Locates pages that aren’t being crawled often

- Identifies static resources that are being crawled too much

- Helps find out whether the new content added has increased bots’ visits

- Checks the URLs or directories that are not often crawled by bots

- Helps determine how your crawl budget is spent

- Alerts on what pages are loading slowly
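
Several of the points above come down to counting bot requests per URL and per status code. The Python sketch below shows one way to do that on already-parsed log entries. The entry layout (`url`, `status`, `user_agent` keys), the `summarize_bot_hits` helper, and the sample data are assumptions made for illustration. Note that a user agent string can be spoofed, so matching on "Googlebot" alone is only a first approximation.

```python
from collections import Counter

def summarize_bot_hits(entries, bot_token="Googlebot"):
    """Count hits per URL and per status code for requests whose
    user agent contains bot_token (case-insensitive)."""
    url_hits = Counter()
    status_hits = Counter()
    for entry in entries:
        if bot_token.lower() in entry["user_agent"].lower():
            url_hits[entry["url"]] += 1
            status_hits[entry["status"]] += 1
    return url_hits, status_hits

# Hypothetical parsed entries.
entries = [
    {"url": "/", "status": 200, "user_agent": "Googlebot/2.1"},
    {"url": "/old-page", "status": 404, "user_agent": "Googlebot/2.1"},
    {"url": "/", "status": 200, "user_agent": "Googlebot/2.1"},
    {"url": "/", "status": 200, "user_agent": "Mozilla/5.0 Firefox"},
]
url_hits, status_hits = summarize_bot_hits(entries)
print(url_hits.most_common())  # most-crawled URLs first
print(dict(status_hits))       # how many bot hits ended in each status code
```

URLs at the bottom of the `url_hits` ranking are the rarely crawled ones, and any 4xx or 5xx keys in `status_hits` point to errors that bots are running into.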

Analyzing Log Files for SEO – Best Ways

Keep an eye on crawl budget

Crawl budget refers to the number of pages a search engine will crawl within a certain timeframe. It is calculated on the basis of crawl limit (how much crawling a website can handle) and crawl demand (which URLs are most worth crawling, based on factors such as how often they are updated). Wasting crawl budget can hurt your site’s SEO performance, as search engines won’t be able to crawl your website efficiently.

Tools like Google Search Console can provide insights into your website’s crawl budget for Google. Its Crawl Stats report shows crawl requests from Googlebot for the last 90 days. Common reasons for wasted crawl budget include poor internal link structure, duplicate or low-quality content, accessible URLs with parameters, broken links, and a large number of non-indexable pages.
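
As a rough illustration of how log data can show where crawl budget goes, the following Python sketch sorts bot requests into four buckets: errors, redirects, parameterized URLs, and clean pages. The bucket names, the `classify_hit` and `crawl_waste_report` helpers, and the sample hits are assumptions for this example, not a standard classification.

```python
from collections import Counter
from urllib.parse import urlsplit

def classify_hit(url, status):
    """Put one bot request into a coarse bucket."""
    if status >= 400:
        return "error"
    if 300 <= status < 400:
        return "redirect"
    if urlsplit(url).query:
        return "parameterized"
    return "clean"

def crawl_waste_report(bot_hits):
    """Return the share of bot hits in each bucket as a fraction of all hits."""
    counts = Counter(classify_hit(url, status) for url, status in bot_hits)
    total = sum(counts.values())
    if total == 0:
        return {}
    return {label: count / total for label, count in counts.items()}

# Hypothetical (url, status) pairs for requests made by a search engine bot.
bot_hits = [
    ("/shoes", 200), ("/hats", 200), ("/", 200), ("/about", 200),
    ("/shoes?sort=price", 200), ("/shoes?sort=name", 200),
    ("/old-page", 404), ("/promo", 301),
]
report = crawl_waste_report(bot_hits)
print(report)
```

In this made-up sample, half of the bot requests land on something other than a clean, working page, which is the kind of pattern worth investigating.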

Check for internal links

Combining log file data with internal link statistics from a site crawl provides details about both the structure and discoverability of pages. If you find that Google is crawling a large number of broken pages on your site, make sure that you don’t have any internal links to these broken pages, and clean up any redirecting internal links.

Also, if there are orphan pages, which no internal or external links point to, consider correcting the issue by linking to them from other relevant pages. At the same time, consider reworking pages that are not being crawled at all and eliminate any errors.
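
Comparing the two data sources is essentially a set comparison. The Python sketch below assumes you already have a list of URLs found through internal links by a site crawler and a list of URLs requested by bots in the logs; the `compare_crawl_and_logs` helper and both sample lists are hypothetical.

```python
def compare_crawl_and_logs(linked_urls, bot_urls):
    """Compare URLs reachable through internal links with URLs bots requested."""
    linked = set(linked_urls)
    requested = set(bot_urls)
    return {
        # Bots request these, but the site crawl found no internal link to them.
        "orphan_candidates": sorted(requested - linked),
        # Internally linked, but no bot request appears in the logs.
        "never_crawled": sorted(linked - requested),
    }

# Hypothetical inputs.
linked_urls = ["/", "/blog", "/blog/seo-tips", "/contact"]
bot_urls = ["/", "/blog", "/landing-2019", "/blog"]

result = compare_crawl_and_logs(linked_urls, bot_urls)
print(result["orphan_candidates"])
print(result["never_crawled"])
```

Orphan candidates still need a manual check, since a bot may know a URL from an old sitemap or an external link rather than from your current site structure.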

Improve your site speed

Page load times above two seconds can often increase bounce rates. Page speed is an important SEO ranking factor, and it can also affect how search engines crawl your site: when pages load faster, search engine bots have time to visit and index more of your site’s URLs. Page size and the use of large images can also affect crawling. A log file analysis shows which pages are loading slowly and which ones are large. Consider reducing the size of large pages, cutting down the number of high-resolution images, and compressing the images on your pages.
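
A simple way to surface slow pages is to average the response time per URL and flag anything above a threshold. The Python sketch below uses the two-second mark mentioned above as a default. It assumes your log format records a response time for each request, which is not part of the default combined format and has to be enabled in the server configuration; the `seconds` field, the `slow_pages` helper, and the sample entries are hypothetical.

```python
from collections import defaultdict
from statistics import mean

def slow_pages(entries, threshold_seconds=2.0):
    """Return (url, average_seconds) pairs above the threshold, slowest first."""
    times = defaultdict(list)
    for entry in entries:
        times[entry["url"]].append(entry["seconds"])
    averages = {url: mean(values) for url, values in times.items()}
    flagged = [(url, avg) for url, avg in averages.items() if avg > threshold_seconds]
    return sorted(flagged, key=lambda pair: pair[1], reverse=True)

# Hypothetical parsed entries with a response time in seconds.
entries = [
    {"url": "/", "seconds": 0.5},
    {"url": "/", "seconds": 0.5},
    {"url": "/gallery", "seconds": 3.0},
    {"url": "/gallery", "seconds": 5.0},
    {"url": "/report", "seconds": 2.5},
]
flagged = slow_pages(entries)
print(flagged)
```

The same grouping works for response size in bytes if you want to list the largest pages instead of the slowest ones.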

Listen to our podcast – Ways to Speed Up Your Website for SEO Purposes

Limit Duplicate Content

Duplicate content refers to the same content appearing at multiple locations (URLs) on the web, which can leave search engines unsure which URL to show in the search results. If you find duplicate URLs, use the rel=canonical tag or consider removing such pages with the support of organic SEO services.
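
One way to spot likely duplicate URLs in log data is to normalize each URL and group the ones that collapse to the same form. The Python sketch below drops a trailing slash and a small set of tracking parameters. The `IGNORED_PARAMS` list, the helper names, and the sample URLs are assumptions, and whether two URLs really serve the same content still has to be confirmed on the site itself.

```python
from collections import defaultdict
from urllib.parse import urlsplit, parse_qsl, urlencode

# Parameters assumed not to change page content; adjust for your own site.
IGNORED_PARAMS = {"utm_source", "utm_medium", "utm_campaign", "sessionid"}

def normalize(url):
    """Strip the trailing slash and ignored parameters, and sort the rest."""
    parts = urlsplit(url)
    path = parts.path.rstrip("/") or "/"
    query = sorted(
        (key, value)
        for key, value in parse_qsl(parts.query)
        if key not in IGNORED_PARAMS
    )
    return path + ("?" + urlencode(query) if query else "")

def duplicate_groups(urls):
    """Map each normalized URL to the distinct raw URLs that collapse to it."""
    groups = defaultdict(set)
    for url in urls:
        groups[normalize(url)].add(url)
    return {key: sorted(raw) for key, raw in groups.items() if len(raw) > 1}

# Hypothetical URLs taken from bot requests in a log file.
urls = ["/shoes", "/shoes/", "/shoes?utm_source=news", "/shoes?color=red", "/hats"]
groups = duplicate_groups(urls)
print(groups)
```

Each group is a candidate for a single canonical URL, with the other variants pointing to it through rel=canonical.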

Read our blog: How To Avoid Duplicate Content In SEO

For SEO, it is important to monitor whether optimized pages are indexed by bots or not.

Log files record information about every request made to your site. Analyze them to check whether your SEO efforts are paying off.

Need support with technical SEO? Consider associating with a professional digital marketing agency in the U.S.

Get in touch with the MedResponsive team today.